- Pre-requisite

Concepts{#toc-pre-requisite-concepts}

- Runtime Analysis{#toc-runtime-analysis}

- Asymptotic Analysis (Big O){#toc-asymptotic-analysis-big-o}

- Amortized Analysis{#toc-amortized-analysis}

- Logarithmic Runtime{#toc-logarithmic-runtime}

- Computational Complexity

Theory{#toc-computational-complexity-theory}

- Complexity Classes{#toc-complexity-classes}

- NP Problems{#toc-np-problems}

- Reductions{#toc-reductions}

- Algorithm Design{#toc-algorithm-design}

- In-place algorithm{#toc-in-place-algorithm}

- Time–Space– tradeoff{#toc-timespace-tradeoff}

- Heuristics{#toc-heuristics}

- Hash Functions{#toc-hash-functions}

- Stack vs. Heap Memory Allocation{#toc-stack-vs.-heap-memory-allocation}

- Runtime Analysis{#toc-runtime-analysis}

- Data Structures and

ADTs{#toc-data-structures-and-adts}

- Lists and Arrays{#toc-lists-and-arrays}

- List{#toc-list}

- Arrays{#toc-arrays}

- Linked Lists{#toc-linked-lists}

- Skip Lists{#toc-skip-lists}

- Stacks and Queues{#toc-stacks-and-queues}

- Stacks{#toc-stacks}

- Queues{#toc-queues}

- Priority Queues{#toc-priority-queues}

- Indexed Priority Queues{#toc-indexed-priority-queues}

- Monotonic Stacks and Queues{#toc-monotonic-stacks-and-queues}

- Hash Tables{#toc-hash-tables}

- Dictionaries and Hash Tables{#toc-dictionaries-and-hash-tables}

- Sets{#toc-sets}

- Trees{#toc-trees}

- Binary Trees{#toc-binary-trees}

- Binary Heaps{#toc-binary-heaps}

- Tries (Prefix Trees){#toc-tries-prefix-trees}

- Suffix Trees/Arrays{#toc-suffix-treesarrays}

- Merkle Trees{#toc-merkle-trees}

- Kd-Trees{#toc-kd-trees}

- Segment Trees{#toc-segment-trees}

- Self-balancing

Trees{#toc-self-balancing-trees}

- AVL Trees{#toc-avl-trees}

- Red–black Trees{#toc-redblack-trees}

- 2-3 Trees and B-Trees{#toc-trees-and-b-trees}

- Graphs{#toc-graphs}

- Flow Networks{#toc-flow-networks}

- Union-Find{#toc-union-find}

- Lists and Arrays{#toc-lists-and-arrays}

- Algorithms and

Techniques{#toc-algorithms-and-techniques}

- Sequence Search and

Sort{#toc-sequence-search-and-sort}

- Binary Search{#toc-binary-search}

- Bubble Sort{#toc-bubble-sort}

- Selection Sort{#toc-selection-sort}

- Insertion Sort{#toc-insertion-sort}

- Merge Sort{#toc-merge-sort}

- QuickSort{#toc-quicksort}

- Heap Sort{#toc-heap-sort}

- Counting Sort{#toc-counting-sort}

- Radix Sort{#toc-radix-sort}

- Bucket Sort{#toc-bucket-sort}

- Cycle Sort{#toc-cycle-sort}

- Timsort{#toc-timsort}

- Array Analysis

Methods{#toc-array-analysis-methods}

- Two Pointer Technique{#toc-two-pointer-technique}

- Fast and Slow Pointers{#toc-fast-and-slow-pointers}

- Sliding Window Technique{#toc-sliding-window-technique}

- Single-pass with Lookup Table{#toc-single-pass-with-lookup-table}

- Prefix Sums and Kadane’s Algorithm{#toc-prefix-sums-and-kadanes-algorithm}

- Prefix Sums with Binary Search{#toc-prefix-sums-with-binary-search}

- Intervals{#toc-intervals}

- Range Operations on Array{#toc-range-operations-on-array}

- Merge Intervals{#toc-merge-intervals}

- String Analysis

Methods{#toc-string-analysis-methods}

- KMP Pattern Matching{#toc-kmp-pattern-matching}

- Rabin–Karp{#toc-rabinkarp}

- Non-Sequential Analysis with Stack{#toc-non-sequential-analysis-with-stack}

- Edit Distance{#toc-edit-distance}

- Heap Use Cases{#toc-heap-use-cases}

- K Largest or Smallest Numbers{#toc-k-largest-or-smallest-numbers}

- Two Heaps (Median of Data Stream){#toc-two-heaps-median-of-data-stream}

- Tree Traversal{#toc-tree-traversal}

- Graph Traversal{#toc-graph-traversal}

- Depth-First Search {#toc-depth-first-search}

- Breadth-First Search{#toc-breadth-first-search}

- Bidirectional Search{#toc-bidirectional-search}

- Dijkstra’s Shortest Path Algorithm{#toc-dijkstras-shortest-path-algorithm}

- A*{#toc-a}

- Bellman-Ford Shortest Path Algorithm{#toc-bellman-ford-shortest-path-algorithm}

- Floyd-Warshall All-Pairs Shortest Path Algorithm{#toc-floyd-warshall-all-pairs-shortest-path-algorithm}

- Graph Analysis

Methods{#toc-graph-analysis-methods}

- Topological Sort{#toc-topological-sort}

- Tarjan’s Strongly Connected Component Algorithm{#toc-tarjans-strongly-connected-component-algorithm}

- Kruskal’s Minimum Spanning Tree Algorithm{#toc-kruskals-minimum-spanning-tree-algorithm}

- Prim’s Minimum Spanning Tree Algorithm{#toc-prims-minimum-spanning-tree-algorithm}

- Recursive

Problems{#toc-recursive-problems}

- The Decision Tree (DAG) Model{#toc-the-decision-tree-dag-model}

- Backtracking{#toc-backtracking}

- Greedy Algorithms{#toc-greedy-algorithms}

- Dynamic Programming & Memoization{#toc-dynamic-programming-memoization}

- Unordered Choices Without Repetitions in 1D{#toc-unordered-choices-without-repetitions-in-1d}

- Unordered Choices With Repetitions in 1D{#toc-unordered-choices-with-repetitions-in-1d}

- Ordered Choices in 1D Sequences{#toc-ordered-choices-in-1d-sequences}

- Ordered Choices in 2D Sequences (Subsequences){#toc-ordered-choices-in-2d-sequences-subsequences}

- Ordered Choices in 2D+ Sequences (DFS){#toc-ordered-choices-in-2d-sequences-dfs}

- Unordered Choices in 2D Sequences (Binary Search){#toc-unordered-choices-in-2d-sequences-binary-search}

- Turn-Based Games (Double-ended queue){#toc-turn-based-games-double-ended-queue}

- Numerical Methods{#toc-numerical-methods}

- Bit Manipulation and Set Operations{#toc-bit-manipulation-and-set-operations}

- Combinatorial

Methods{#toc-combinatorial-methods}

- Permutations{#toc-permutations}

- Combinations{#toc-combinations}

- Cartesian Product{#toc-cartesian-product}

- n-th Partial Sum{#toc-n-th-partial-sum}

- Derangement{#toc-derangement}

- Fibonacci Numbers{#toc-fibonacci-numbers}

- Lattice Paths{#toc-lattice-paths}

- Catalan Numbers{#toc-catalan-numbers}

- Stars and Bars{#toc-stars-and-bars}

- Geometric

Problems{#toc-geometric-problems}

- K Nearest Neighbors{#toc-k-nearest-neighbors}

- Convex Hulls{#toc-convex-hulls}

- Count Rectangles{#toc-count-rectangles}

- Area of Histograms{#toc-area-of-histograms}

- Newton’s Method{#toc-newtons-method}

- Sequence Search and

Sort{#toc-sequence-search-and-sort}

- Appendix{#toc-appendix}

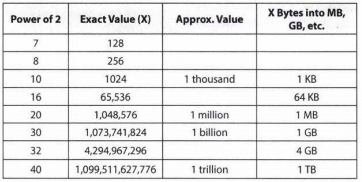

- Powers of 2 Table{#toc-powers-of-2-table}

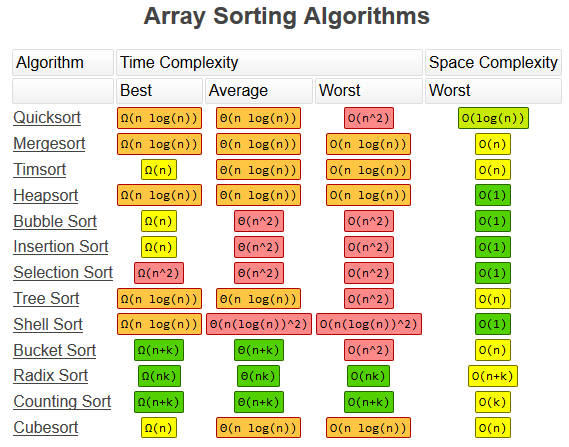

- Array Sorting Algorithms Table{#toc-array-sorting-algorithms-table}

- Single-Source Shortest Path Table{#toc-single-source-shortest-path-table}

- Algorithm Optimization Checklist{#toc-algorithm-optimization-checklist}

- Whiteboard Interview Checklist{#toc-whiteboard-interview-checklist}

Pre-requisite Concepts

Runtime Analysis

Asymptotic Analysis (Big O)

We can measure the growth rate of the time or space complexity of an algorithm using an upper bound ($\mathcal{O}(f)$), lower bound ($\Omega (f)$) or a tight bound ($\Theta (f)$) on the best, worst or average case run time. When analysing an algorithm we typically use an upper bound on the worst case. \(\mathcal{O}(1) \leq \mathcal{O}(\log n) \leq \mathcal{O}(n) \leq \mathcal{O}(n \log n) \leq \mathcal{O}(n^2) \leq \mathcal{O}(2^n) \leq \mathcal{O}(n!)\)

::: multicols 2

-

$\mathcal{O}(1)$ - constant time

-

$\mathcal{O}(\log(n))$ - logarithmic time

-

$\mathcal{O}((\log(n))^c)$ - polylogarithmic time

-

$\mathcal{O}(n)$ - linear time

-

$\mathcal{O}(n^2)$ - quadratic time

-

$\mathcal{O}(n^c)$ - polynomial time

-

$\mathcal{O}(c^n)$ - exponential time

-

$\mathcal{O}(n!)$ - factorial time :::

Amortized Analysis

If the cost of an action has high variance, i.e. its computation is often inexpensive but is occasionally expensive, we can capture its expected behaviour using an amortized time value. If we let $T(n)$ represent the amount of work the algorithm does on an input of size $n$, An operation has amortized cost $T(n)$ if $k$ operations cost $\leq k \cdot T(n)$. $T(n)$ being amortized roughly means $T(n)$ is averaged over all possible operations.

For example, a dynamic array will copy over elements to an array of double its size whenever an insert is called on an already full instance, otherwise it will simply insert the new element. For $n$ insertions, this happens on every $2, 4, 8, …, n$ element. \(T(n) = \mathcal{O}( n + \frac{n}{2} + \frac{n}{4} + \cdots + 1) = \mathcal{O}(2n)\) Therefore, an insertion takes $\mathcal{O}(n)$ time in the worst case but the amortized time for each insertion is $\mathcal{O}(1)$.

A data structure realizing an amortized complexity of $\mathcal{O}(f(n))$ is less performant than one with a worst-case complexity is $\mathcal{O}(f(n))$, since a very expensive operation might still occur, but it is better than an algorithm with an average-case complexity $\mathcal{O}(f(n))$, since the amortized bound will achieve this average on any input.

Logarithmic Runtime

When encountering an algorithm in which the number of elements in the problem space is halved on each step, i.e. in a divide and conquer solution like binary search, the algorithm will likely have a $\mathcal{O}(\log n)$ or $\mathcal{O}(n \log n)$ run-time. We can think of $\mathcal{O}(n \log n)$ as doing $\log n$ work $n$ times.

Again, if we let $T(n)$ represent the amount of work the algorithm does

on an input of size $n$, $$\begin{aligned}

T(n) &= T(n/2) + \Theta(1)

&= T(n/4)+ \Theta(1) + \Theta(1) \

&= \Theta(1) + \cdots + \Theta(1)

&= \Theta(\log n )

\end{aligned}$$

Computational Complexity Theory

Complexity Classes

\[P \subseteq NP \subseteq EXP \subseteq R\]-

$P$: The set of problems that can be solved in polynomial time.

-

$NP$: The set of decision problems that can be solved in non-deterministic polynomial time via a “lucky” algorithm.

-

$EXP$: The set of problems that can be solved in exponential time.

-

$R$: The set of problems that can be solved in finite time.

NP Problems

Nondeterminsitic Polynomial (NP) problems follow a nondeterministic model in which an algorithm makes guesses and produce a binary output of YES or NO. These are the simplest interesting class of problems and are known as decision problems. A “lucky” algorithm can make guesses which are always correct without having to attempt all options. In other words, $NP$ is the set of decision problems with solutions that can be verified in polynomial time.

P vs. NP asks whether generating proofs of solutions is harder than checking, i.e whether every problem whose solution can be quickly verified can also be solved quickly. NP-hard problems are those at least as hard as all NP problems. NP-hard problems need not be in NP; that is, they may not have solutions verifiable in polynomial time. NP-complete problems are a set of problems to each of which any other NP-problem can be reduced in polynomial time and whose solution may still be verified in polynomial time. In fact, NP-complete = NP $\cap$ NP-hard.

Reductions

A reduction is an algorithm for transforming one problem into another problem for which a solution or analysis already exists (instead of solving it from scratch). A sufficient reduction from one problem to another can be used to show that the second problem is at least as difficult as the first.

NP-complete problems are all interreducible using polynomial-time reductions (same difficulty). This implies that we can use reductions to prove NP-hardness. A one-call reduction is a polynomial time algorithm that constructs an instance of $X$ from an instance $Y$ so that their optimal values are equal, i.e. $X$ problem $\implies$ $Y$ problem $\implies$ $Y$ solution $\implies$ $X$ solution. Multicall reductions instead solve $X$ using free calls to $Y$ – in this sense, every algorithm reduces the problem and model of computation.

Algorithm Design

In-place algorithm

An in-place algorithm is an algorithm that transforms input using no auxiliary data structure, though a small amount of extra storage space is allowed for a constant number of auxiliary variables. The input is usually overwritten by the output (mutated) as the algorithm executes. An in-place algorithm updates input sequence only through replacement or swapping of elements.

Time–Space– tradeoff

A time-space or time–memory trade-off is a case where an algorithm trades increased space usage with decreased time complexity. Here, space refers to the data storage consumed in performing a given task (RAM, HDD, etc), and time refers to the time consumed in performing a given task (computation time or response time).

Heuristics

A heuristic is a technique designed for solving a problem more quickly when classic methods are too slow, or for finding an approximate solution when classic methods fail to find any exact solution. This is achieved by trading optimality, completeness, accuracy, or precision for speed. In a way, it can be considered a shortcut.

Hash Functions

A hash function is any function that can be used to map data of arbitrary size to fixed-size values. The values returned by a hash function are called hash values, hash codes, digests, or simply hashes. A good hash function satisfies two basic properties: it should be very fast to compute and it should minimize duplication of output values (collisions). For many use cases, it is useful for every hash value in the output range to be generated with roughly the same probability. Two of the most common hash algorithms are the MD5 (Message-Digest algorithm 5) and the SHA-1 (Secure Hash Algorithm).

Stack vs. Heap Memory Allocation

The stack is the memory set aside as scratch space for a thread of execution. When a function is called, a block of fixed size is reserved on the top of the stack for local variables and some bookkeeping data. When that function returns, the block becomes freed for future use. The stack is always reserved in a LIFO (last in first out) order; the most recently reserved block is always the next block to be freed. This makes it really simple and fast to keep track of and access the stack; freeing a block from the stack is nothing more than adjusting one pointer. Also, each byte in the stack tends to be reused very frequently which means it tends to be mapped to the processor’s cache, making it very fast.

The heap is memory set aside for dynamic allocation by the OS through the language runtime. Unlike the stack, there’s no enforced pattern to the allocation and deallocation of blocks from the heap. The size of the heap is set on application startup, but can grow as space is needed. This makes it much more complex to keep track of and access which parts of the heap are allocated or free at any given time. Another performance hit for the heap is that the heap, being mostly a global resource, typically has to be multi-threading safe.

Data Structures and ADTs

An abstract data type (ADT) is a theoretical model of an entity and the set of operations that can be performed on that entity

A data structure is a value in a program which can be used to store and operate on data, i.e. it is a programmed implementation of an ADT.

Contiguously-allocated structures are composed of single slabs of memory, and include arrays, matrices, heaps, and hash tables.

Linked data structures are composed of distinct chunks of memory bound together by pointers, and include lists, trees, and graph adjacency lists. Recall, a pointer is a reference to a memory address which stores some data.

Lists and Arrays

List

A list is an abstract data type that represents a countable number of ordered values, where the same value may occur more than once. Lists are a basic example of containers, as they contain other values. Their operations include the following,

-

isEmpty(L): test whether or not the list is empty

-

prepend(L, item): prepend an entity to the list

-

append(L, item): append an entity to the list

-

get(L, i): access the element at a given index.

-

head(L): determine the first component of the list

-

tail(L): refer to the list consisting of all the components of a list except for its first (head).

A self-organizing list is a list that reorders its elements based on some self-organizing heuristic to improve average access time. The aim of a self-organizing list is to improve efficiency of linear search by moving more frequently accessed items towards the head of the list. A self-organizing list achieves near constant time for element access in the best case and uses a reorganizing algorithm to adapt to various query distributions at runtime.

Arrays

An array is a data structure implementing a list ADT, consisting of a collection of elements (values or variables), each identified by at least one array index or key.

A bit array (a.k.a bit map or bit mask) is a data structure which uses an array of 0’s and 1’s to compactly store information. An index $j$ with a value of 1 indicates the presence of an integer corresponding to $j \in \mathbb Z$, operations performed on these binary arrays are analogs to set operations. We can extend the bit array to store arbitrary integers. When given a constraint on possible values that need to be stored and analyzed, we can initialize an array with a size of the range of max possible values and record frequencies directly in their corresponding index, eliminating the need to re-order an unsorted array or maintain a sorted order on every insertion.

A dynamic array is a data structure that allocates all elements contiguously in memory and keeps a count of the current number of elements. If the space reserved for the dynamic array is exceeded, it is reallocated and (possibly) copied, which is an expensive operation. Though its amortized insertion cost is equal to a static array, $\Theta(1)$. Python’s “List” data structure is a dynamic array which uses table doubling to support its constant amortized operations. It is possible to implement real-time table doubling to support constant time worst-case insertions by incrementally building up a larger array on initial insertions which we can then quickly switch to when the original array is full.

Time Complexity of List operations

Operation Average Case Amortized Worst Case

—————— ———————— ————————–

Copy $\mathcal{O}(n)$ $\mathcal{O}(n)$

Append $\mathcal{O}(1)$ $\mathcal{O}(1)$

Pop last $\mathcal{O}(1)$ $\mathcal{O}(1)$

Pop intermediate $\mathcal{O}(k)$ $\mathcal{O}(k)$

Insert $\mathcal{O}(n)$ $\mathcal{O}(n)$

Get Item $\mathcal{O}(1)$ $\mathcal{O}(1)$

Set Item $\mathcal{O}(1)$ $\mathcal{O}(1)$

Delete Item $\mathcal{O}(n)$ $\mathcal{O}(n)$

Iteration $\mathcal{O}(n)$ $\mathcal{O}(n)$

Get Slice $\mathcal{O}(k)$ $\mathcal{O}(k)$

Del Slice $\mathcal{O}(n)$ $\mathcal{O}(n)$

Set Slice $\mathcal{O}(k+n)$ $\mathcal{O}(k+n)$

Extend $\mathcal{O}(k)$ $\mathcal{O}(k)$

Sort $\mathcal{O}(n\log n)$ $\mathcal{O}(n\log n)$

Multiply $\mathcal{O}(nk)$ $\mathcal{O}(nk)$

x in s $\mathcal{O}(n)$

min(s), max(s) $\mathcal{O}(n)$

Get Length $\mathcal{O}(1)$ $\mathcal{O}(1)$

Linked Lists

A linked list is a data structure that represents a sequence of nodes. In a singly linked list each node maintains a pointer to the next node in the linked list. A doubly linked list gives each node pointers to both the next node and the previous node. Unlike an array, a linked list does not provide constant time access to a particular “index” within the list, i.e. to access the $K$th index you will need to iterate through $K$ elements. The benefit of a linked list is that inserting and removing items from the beginning of the list can be done in constant time. For specific applications, this can be useful. Linked structures can have poor cache performance compared with arrays. Maintaining a sorted linked list is costly and not usually worthwhile since we cannot perform binary searches.

A doubly linked list maintains a reference to the node previous to it, allowing for bidirectional traversal at the expense of additional book-keeping on insertions and deletions. Doubly linked lists are commonly found in least-recently used (LRU) and least frequently used (LFU) caches where they maintain an ordering of keys for the caching policy. Although double-ended queues support removing and adding items to the head and tail, removing items from middle of a queue to the head or tail is less performant than in a doubly linked list.

Python Implementation

``` {.python language=”Python”} class ListNode: def init(self, val=0, next=None): self.val = val self.next = next

### Skip Lists

As an alternative to balanced trees examined later, a hierarchy of

sorted linked lists is maintained, where a random variable is associated

to each element to decide whether it gets copied into the next highest

list. This implies roughly $\log n$ lists, each roughly half as large as

the one above it. A search starts in the smallest list. The search key

lies in an interval between two elements, which is then explored in the

next larger list. Each searched interval contains an expected constant

number of elements per list, for a total expected $\mathcal{O}(\log n)$

time for lookups, insertions, and removals.

This structure is well-suited for solving problems like tracking a

running median which requires sort order to be maintained as new items

are added and old items are deleted and which requires fast access to

the n-th item to find the median or quarterlies. The primary benefits of

skip lists for other purposes achievable by B+trees are ease of analysis

and implementation relative to balanced trees.

## Stacks and Queues

### Stacks

A stack is an ADT container that uses last-in first-out (LIFO) ordering,

i.e. the most recent item added to the stack is the first item to be

removed. It supports the following operations:

- **pop()**: Remove the top item from the stack.

- **push(item)**: Add an item to the top of the stack.

- **peek()**: Return the top of the stack.

- **isEmpty()**: Return true if and only if the stack is empty.

Unlike an array, a stack does not offer constant-time access to the

$i$th item. However, it does allow constant time adds and LIFO removals

since it doesn't require shifting elements around. One case where stacks

are often useful is in certain recursive algorithms where we need to

push temporary data onto a stack as we recurse and then remove them as

we backtrack (for example, because the recursive check failed). A stack

offers an intuitive way to do this. A stack can also be used to

implement a recursive algorithm iteratively which is what's otherwise

done in a function's call stack. It is occasionally useful to maintain

two stacks in order to have access to a secondary state, like the

minimum/maximum, in constant time.

### Queues

A queue is an ADT container that implements FIFO (first-in first-out)

ordering, i.e. items are removed in the same order that they are added.

It supports the following operations:

- **push(item)**: Add an item to the end of the queue.

- **popLeft()**: Remove and return the first item in the queue.

- **peek()**: Return the top of the queue.

- **isEmpty()**: Return true if the queue is empty.

Some common places where queues are often used is in breadth-first

search or in implementing a cache. In breadth-first search we may use a

queue to store a list of the nodes that we need to process. Each time we

process a node, we add its adjacent nodes to the back of the queue. This

allows us to process nodes in the order in which they are viewed.

A queue can be implemented with a linked list and moreover, they are

essentially the same thing as long as items are added and removed from

opposite sides.

The deque module (short for double-ended queue), provides a data

structure which pops from or pushes to either side of the queue with the

same $\mathcal{O}(1)$ performance.

*Python Implementation*

``` {.python language="Python"}

from collections import deque

q = deque()

for item in data:

q.append(item)

while len(q):

next_item = q.popleft() # array.pop(0)

print(next_item)

Time Complexity of collections.deque operations

Operation Average Case Amortized Worst Case ————— —————— ————————– Copy $\mathcal{O}(n)$ $\mathcal{O}(n)$ append $\mathcal{O}(1)$ $O(1)$ appendleft $\mathcal{O}(1)$ $\mathcal{O}(1)$ pop $\mathcal{O}(1)$ $\mathcal{O}(1)$ popleft $\mathcal{O}(1)$ $\mathcal{O}(1)$ extend $\mathcal{O}(k)$ $\mathcal{O}(k)$ extendleft $\mathcal{O}(k)$ $\mathcal{O}(k)$ rotate $\mathcal{O}(k)$ $\mathcal{O}(k)$ remove $\mathcal{O}(n)$ $\mathcal{O}(n)$

Priority Queues

A priority queue is an ADT container that retrieves items not by the insertion order (as in a stack or queue), nor by a key match (as in a dictionary), but instead retrieves items with the highest priority value. Priority queues provide more flexibility than simple sorting because they allow new elements to enter a system at arbitrary intervals. It is much more cost-effective to insert a new job into a priority queue than to re-sort everything on each arrival. Max-priority queues order values in descending order with higher priority first and are typically implemented with a max-heap. Min-priority queues sort values in ascending order with lower priority values first and are typically implemented with a min-heap.

The basic priority queue supports three primary operations:

-

insert(Q, x): Given an item x with key k, insert it into the priority queue Q.

-

findMinimum(Q) or findMaximum(Q): Return a pointer to the item whose key value is smaller (larger) than any other key in the priority queue Q.

-

deleteMinimum(Q) or deleteMaximum(Q): Remove the item from the priority queue Q whose key is minimum (maximum).

There are several choices in which underlying data structures can be used for a basic priority queue implementation:

-

Binary heaps are the right answer when the upper bound on the number of items in your priority queue is known, since you must specify array size at creation time. Though this constraint can be mitigated by using dynamic arrays

-

Binary search trees make effective priority queues, since the smallest element is always the leftmost leaf, while the largest element is always the rightmost leaf. The min (max) is found by simply tracing down left (right) pointers until the next pointer is nil. Binary tree heaps prove most appropriate when you need other dictionary operations, or if you have an unbounded key range and do not know the maximum priority queue size in advance.

-

Sorted arrays are very efficient in both identifying the smallest element and deleting it by decrementing the top index. However, maintaining the total order makes inserting new elements slow. Sorted arrays are only suitable when there will be few insertions into the priority queue.

-

Bounded height priority queue work as their name suggests.

-

Fibonacci and pairing heaps can be used to implement complicated priority queues that are designed to speed up decrease-key operations, where the priority of an item already in the priority queue is reduced. This arises, for example, in shortest path computations when we discover a shorter route to a vertex $v$ than previously established.

Note: See binary heap section for more details about heapq. The heapq module creates min-heaps by default, max priority queues can be created by inverting priority values before insertion. The Queue.PriorityQueue module is a partial wrapper around the heapq module which also implements min-priority queues by default.

Python Implementation

``` {.python language=”Python”}

The queue module for a min-priority queue

from queue import PriorityQueue

pq = PriorityQueue() for item in data: pq.put((item.priority, item))

while not pq.empty(): next_item = pq.get() print(next_item)

The heapq module for a min-priority queue

import heapq pq = [] for idx, item in enumerate(data): heapq.heappush(pq, (item.priority, idx, item))

while pq: next_item = heapq.heappop(pq) print(next_item)

### Indexed Priority Queues

An Indexed Priority Queue gives us the ability to change the priority of

an element without having to go through all the elements. It can be

thought of as a combination of a hash table, used for quick lookups of

values, and a priority queue, to maintain a heap ordering.

### Monotonic Stacks and Queues

This structure maintains an ordering so that its elements are either

strictly increasing or strictly decreasing. It differs from a heap in

that instead of re-ordering elements as they're processed, it will

discard previous numbers that do not follow the monotonic condition

before appending a new number. It is often used to reduce a quadratic

time algorithm to a linear one by maintaining a kind of short-term

memory of the most recently processed larger or smaller value.

For example, a monotonic increasing stack can be used to find the index

distance of the next smaller number given an unordered list of numbers.

Instead of searching for the next smallest number for every number with

a nested for loop, resulting in an $\mathcal{O}(n^2)$ runtime, we only

need to check the top of the stack of indicies of previously visited

numbers, giving us a $\mathcal{O}(n)$ runtime. In an monotonic

increasing stack, we append values and pop from the right.

A geometric variant of the question asks about the area that exists in

the spaces bounded above from a given elevation map or histogram. We can

use increasing stacks but need to keep track of lower bounds and may

need to record a cumulative or max value across the entire sequence.

In some application we might also need to remove elements from the front

and back, thus a double-ended queue (deque from collections module)

should be used. In an increasing queue, we find the first element

smaller than the current, either on the left (from pushing in) or on the

right (from popping out). In a decreasing queue we find the first

element larger than current, either in the left (from pushing in) or in

the right (from popping out). A monotonic queue can also be useful for

implementing a variant of the sliding window. See sliding window section

for an example.

*Python Implementation*

``` {.python language="Python"}

def monotonic_stack(A):

smaller_to_right = [-1] * len(A)

stack = []

for i, v in enumerate(A):

while stack and A[stack[-1]] > v: # use < for larger_to_right

cur = stack.pop()

smaller_to_right[cur] = i - cur

stack.append(i)

return smaller_to_right

def trap_water(height):

stack, water = [], 0

for i, v in enumerate(height):

while stack and v >= stack[-1][0]:

right_border, _ = stack.pop()

# we need a left and right border to contain water

if stack:

left_border, j = stack[-1]

# compute the cumulative water

water += min(left_border-right_border, v-right_border)*(i-j-1)

stack.append((water, i))

return water

import collections

def monotonic_deque(A):

dq = collections.deque()

smaller_to_left, smaller_to_right = [-1] * len(A), [-1] * len(A)

for i, v in enumerate(A):

while dq and A[dq[-1]] >= v:

smaller_to_right[dq.pop()] = v

if dq:

smaller_to_left[i] = A[dq[-1]]

dq.append(i)

return smaller_to_left, smaller_to_right

Hash Tables

A hash table is an abstract data type that maps keys to values for highly efficient lookup. There are a number of ways of implementing this, but a simple and common implementation is known as separate chaining. In this implementation, we use an array of linked lists and a hash code function. To insert a key (which might be a string or essentially any other data type) and value, we do the following:

-

First, compute the key’s hash code, which will usually be an int or long. Note that two different keys could have the same hash code, as there may be an infinite number of keys and a finite number of hash codes.

-

Then, map the hash code to an index in the array. This could be done with something like $hash(key)\mod array_length$.

-

At this index, there is a linked list of keys and values. Store the key and value in this index. We must use a linked list because of collisions: you could have two different keys with the same hash code, or two different hash codes that map to the same index.

To retrieve the value pair by its key, you repeat this process. Compute the hash code from the key, and then compute the index from the hash code. Then, search through the linked list for the value with this key. If the number of collisions is very high, the worst case runtime is $\mathcal{O}(n)$, where $n$ is the number of keys. However, we generally assume a good implementation that keeps collisions to a minimum, in which case the lookup time is $\mathcal{O}(1)$. Alternatively, we can implement the hash table with a balanced binary search tree. This gives us an $\mathcal{O}(\log n)$ lookup time. The advantage of this is potentially using less space, since we no longer allocate a large array. We can also iterate through the keys in order, which can be useful sometimes.

The other strategy used to resolve collisions is to not only require each array element to contain only one key, but to also allow keys to be mapped to alternate indices when their original spot is already occupied. This is known as open addressing. In this type of hashing, we have a parameterized hash function $h$ that takes two arguments, a key and a positive integer. Searching or probing for an item requires examining not just one spot, but many spots until either we find the key, or reach a None value. After we delete an item, we replace it with a special value Deleted, rather than simply None. This way, the Search algorithm will not halt when it reaches an index that belonged to a deleted key.

The simplest implementation of open addressing is linear probing: start at a given hash value and then keep adding some fixed offset to the index until an empty spot is found. The main problem with linear probing is that the hash values in the middle of a cluster will follow the exact same search pattern as a hash value at the beginning of the cluster. As such, more and more keys are absorbed into this long search pattern as clusters grow. We can solve this problem using quadratic probing, which causes the offset between consecutive indices in the probe sequence to increase as the probe sequence is visited. Double hashing resolves the problem of the clustering that occurs when many items have the same initial hash value and they still follow the exact same probe sequence. It does this by using a hash function for both the initial value and its offset.

A useful hash function for strings is, \(H(S,j) = \sum_{i=0}^{m-1} \alpha^{m-(i+1)} \cdot char(s_{i+j}) \mod m\) where $\alpha$ is the size of the alphabet and $char(x)$ is the ASCII character code. This hash function has the useful property allowing hashes of successive m-character windows of a string to be computed in constant time instead of $\mathcal{O}(m)$. \(H(S, j+1) = (H(S,j) - \alpha^{m-1}char(s_j))\alpha + s_{j+m}\)

Dictionaries and Hash Tables

The dictionary data structure (implementing the hash table or hash map ADT) permits access to data items based on a key derived from its content. You may insert an item into a dictionary to retrieve it in constant time later on. To resolve hash collisions, Python $\mathtt{dicts}$ use open addressing with random probing where the next slot is picked in a pseudo random order. The entry is then added to the first empty slot.

The primary operations a hash table supports are:

-

search(D, k) – Given a search key $k$, return a pointer to the element in dictionary $D$ whose key value is $k$, if one exists.

-

insert(D, x) – Given a data item $x$, add it to the set in the dictionary $D$.

-

delete(D, x) – Given a pointer to a given data item $x$ in the dictionary $D$, remove it from $D$.

-

max(D) or min(D) – Retrieve the item with the largest (or smallest) key from $D$. This enables the dictionary to serve as a priority queue.

-

predecessor(D, k) or successor(D, k) – Retrieve the item from $D$ whose key is immediately before (or after) $k$ in sorted order. These enable us to iterate through the elements of the data structure.

Time Complexity of Dictionary Operations

Operation Average Case Amortized Worst Case ————— —————— ————————– k in d $\mathcal{O}(1)$ $\mathcal{O}(n)$ Copy $\mathcal{O}(n)$ $\mathcal{O}(n)$ Get Item $\mathcal{O}(1)$ $\mathcal{O}(n)$ Set Item $\mathcal{O}(1)$ $\mathcal{O}(n)$ Delete Item $\mathcal{O}(1)$ $\mathcal{O}(n)$ Iteration $\mathcal{O}(n)$ $\mathcal{O}(n)$

Sets

In mathematical terms, a set is an unordered collection of unique objects drawn from a fixed universal set. A hash set implements the set ADT using a hash table. The core operations that sets support are:

-

Test whether $u_i \in S$.

-

Compute the union or intersection of sets $S_i$ and $S_j$.

-

Insert or delete members of $S$.

Sets are commonly implemented with the following data structures:

-

Containers or dictionaries – A subset can also be represented using a linked list, array, or dictionary containing exactly the elements in the subset.

-

Bit vectors – An n-bit vector or array can represent any subset $S$ on a universal set $U$ containing $n$ items. Bit $i$ will be 1 if $i \in S$, and $0$ if not.

-

Bloom filters – We can emulate a bit vector in the absence of a fixed universal set by hashing each subset element to an integer from $0$ to $n$ and setting the corresponding bit.

Sorted order turns the problem of finding the union or intersection of two subsets into a linear-time operation, just sweep from left to right and see what you are missing. It makes element searching possible in sublinear time. Though, it’s important to remember that order doesn’t matter and requirements for accessing elements of the set in any canonical order will not be respected.

If each subset contains exactly two elements, they can be thought of as edges in a graph whose vertices represent the universal set. A system of subsets with no restrictions on the cardinality of its members is called a hypergraph.

Time Complexity of Set Operations

Operation Average Case Amortized Worst Case

—————————– ———————————————– ——————————–

x in s $\mathcal{O}(1)$ $\mathcal{O}(n)$

Union $\mathcal{O}(len(s)+len(t))$

Intersection $\mathcal{O}(min(len(s), len(t))$ $\mathcal{O}(len(s) * len(t))$

Multiple intersection $\mathcal{O}(n-1)*\mathcal{O}(max(len(s_i)))$

Difference $\mathcal{O}(len(s))$

Difference Update $\mathcal{O}(len(t))$

Symmetric Difference $\mathcal{O}(len(s))$ $\mathcal{O}(len(s) * len(t))$

Symmetric Difference Update $\mathcal{O}(len(t))$ $\mathcal{O}(len(t) * len(s))$

Trees

A tree is an ADT composed of nodes such that there is a root node with zero or more child nodes where each child node can be recursively defined as a root node of a sub-tree with zero or more children. Since there are no edges between sibling nodes, a tree cannot contain cycles. Furthermore, nodes can be given a particular order, can have any data type as values, and they may or may not have links back to their parent nodes.

Binary Trees

A binary tree is a tree in which each node has up to two children.

A binary search tree is a binary tree in which every node $n$ follows a specific ordering property: all left descendants $\leq n <$ all right descendants. An inorder traversal (Left-Node-Right) of a binary search tree will always result in a monotonically increasingly ordered sequence.

A complete binary tree is a binary tree in which every level of the tree is filled, except for perhaps the last level and all of the nodes in the bottom level are as far to the left as possible. A complete tree with $n$ nodes has $\lceil \log n \rceil$ height. There is no ambiguity about where the “empty” spots in a complete tree are so we do not need to use up space to store references between nodes, as we do in a standard binary tree implementation. This means that we can store its nodes inside an zero-indexed array. For a node corresponding to index $i$, its left child is stored at index $2i + 1$, and its right child is stored at index $2i + 2$. Going backwards, we can also deduce that the parent of the node at index $i$ (when $i > 0$) is stored at index $\lfloor (i-1)/2 \rfloor$.

A full binary tree is a binary tree in which every node has either zero or two children. A perfect binary tree is one that is both full and complete. A full binary tree with $n$ leaves has $n - 1$ internal nodes. Then the number of unique rooted full binary tree with $n + 1$ leaf nodes, equivalently $n$ internal node, can be counted using the $nth$ Catalan number, $C_n$. View section on Catalan numbers for more details.

Python Implementation

``` {.python language=”Python”}

n-ary tree using default dictionary

from collections import defaultdict tree = lambda: defaultdict(tree)

Object-oriented binary tree

class TreeNode: def init(self, val=0, left=None, right=None): self.val = val self.left = left self.right = right

### Binary Heaps

The **heap property** states that the key stored in each node is more

extreme (greater or less than) or equal to the keys in the node's

children. A **min-heap** is a complete binary tree (filled other than

the rightmost elements on the last level) where each node is smaller

than its children. The root, therefore, is the minimum element in the

tree. The converse ordering holds for a **max-heap**. We have two main

operations on a heap:

- **insert(x)**: When we insert into a min-heap, we always start by

inserting the element at the bottom. We insert at the rightmost spot

so as to maintain the complete tree property. Then, we maintain the

heap property by swapping the new element with its parent until we

find an appropriate spot for the element. We essentially bubble up

the minimum element. This takes $\mathcal{O}(\log n)$ time, where

$n$ is the number of nodes in the heap.

- **findMin()** or **findMax()**: Finding the minimum element of a

min-heap is inexpensive since it will always be at the top. The

challenging part is how to remove it while maintaining the heap

property. First, we remove the minimum element and swap it with the

last element in the heap (the bottommost, rightmost element). Then,

we bubble down this element, swapping it with one of its children

until the heap property is restored. This algorithm will also take

$\mathcal{O}( \log n)$ time.

A heap will be better at findMin/findMax ($\mathcal{O}(1)$), while a BST

is performant at all finds ($\mathcal{O}(\log n)$). A heap is especially

good at basic ordering and keeping track of max and mins.

Note, heapq creates a min-heap by default. To create a max-heap, you

will need to invert values before storing and after retrieving them.

Alternatively, you can define a class to wrap the module and override

and invert the comparison method. The $\mathtt{heapreplace(a, x)}$

method returns the smallest value originally in the heap regardless of

the value of x. Alternatively, $\mathtt{heappushpop(a, x)}$ pushes x

onto the heap before popping the smallest value.

*Python Implementation*

``` {.python language="Python"}

# Using heapq module

import heapq

items = [1, 6, 4, 2, 5, 7, 3, 9, 8, 10]

## Min-heap

min_heap = []

for item in items:

heapq.heappush(min_heap, item)

in_place = items.copy()

heapq.heapify(in_place)

heapq.nsmallest(3, in_place)

while in_place:

min_item = heapq.heappop(in_place)

print(min_item)

## Max-heap

max_heap = []

for item in items:

heapq.heappush(max_heap, -item)

while max_heap:

max_item = -heapq.heappop(max_heap)

print(max_item)

in_place = items.copy()

heapq._heapify_max(in_place)

heapq.nlargest(3, in_place)

while in_place:

max_item = heapq._heappop_max(in_place)

print(max_item)

# Max heap full implementation

class MaxHeap:

def __init__(self, items=[]):

self.heap = [0]

for i in items:

self.heap.append(i)

self._floatUp(len(self.heap) - 1)

def push(self, data):

self.heap.append(data)

self._floatUp(len(self.heap) - 1)

def peek(self):

if self.heap[1]:

return self.heap[1]

else:

return False

def pop(self):

if len(self.heap) > 2:

self._swap(1, len(self.heap) - 1)

maxVal = self.heap.pop()

self._bubbleDown(1)

elif len(self.heap) == 2:

maxVal = self.heap.pop()

else:

maxVal = False

return maxVal

def _swap(self, i, j):

self.heap[i], self.heap[j] = self.heap[j], self.heap[i]

def _floatUp(self, index):

parent = index // 2

if index <= 1:

return

elif self.heap[index] > self.heap[parent]:

self._swap(index, parent)

self._floatUp(parent)

def _bubbleDown(self, index):

left = index * 2

right = index * 2 + 1

largest = index

if len(self.heap) > left and self.heap[largest] < self.heap[left]:

largest = left

if len(self.heap) > right and self.heap[largest] < self.heap[right]:

largest = right

if largest != index:

self._swap(index, largest)

self._bubbleDown(largest)

Time Complexity of heapq operations

Operation Average Case Amortized Worst Case ————— ———————– ————————– heapify $\mathcal{O}(n)$ $\mathcal{O}(n)$ heappush $\mathcal{O}(1)$ $O(\log n)$ heappop $\mathcal{O}(\log n)$ $O(\log n)$ peek $\mathcal{O}(1)$ $\mathcal{O}(1)$ heappushpop TBD TBD heapreplace TBD TBD nlargest TBD TBD nsmallest TBD TBD

Tries (Prefix Trees)

A trie is a variant of an n-ary tree in which alphanumeric characters are stored at each node. Each path down the tree may represent a word. The * nodes (sometimes called “null nodes") are often used to indicate complete words. The actual implementation of these * nodes might be a special type of child (i.e. a TerminatingTrieNode class which inherits from TrieNode) or we can use a boolean flag. A node in a trie could have anywhere from 1 through size of alphabet + 1 children (or, 0 through size of alphabet if a boolean flag is used instead of a * node).

The complexity of creating a trie is $\mathcal{O}(|W| L)$, where $W$ is the number of words, and $L$ is an average length of the word: you need to perform $L$ lookups on average for each of the $W$ words in the set. Same goes for looking up words later: you perform $L$ steps for each of the $W$ words. Very commonly, a trie is used to store the entire English language for quick prefix lookups. Many problems involving lists of valid words leverage a trie as an optimization. Note that it can often be useful to preprocess the words before inserting them into a trie, possibly by parsing or reversing.

To get autocomplete suggestions, it helps to store the cumulative word along with the end indicator. We can then search the trie up to the prefix end and perform a recursive DFS to find all word end points while appending the results to an array of suggestions. To handle misspellings, a function considers four types of edits: a deletion (remove one letter), a transposition (swap two adjacent letters), a replacement (change one letter to another) or an insertion (add a letter). The edit function returns a list of words within a max $N$ edits from the specified word that exist in a dictionary of known words. We can then find suggestions with DFS as before. For better semantics, we can use bayesian probabilities to determine the intent of the user1.

Note, most methods in the trie follow a similar traversal pattern, create a pointer to the root of the tree, iterate over characters of a given word, check if the character is not in the current pointers children and then move the pointer forward to the corresponding child.

Python Implementation

``` {.python language=”Python”}

Trie node as lambda function

import collections TrieNode = lambda: collections.defaultdict(TrieNode)

Trie node as class with end of word attribute

class TrieNode: def init(self): self.word = False self.children = {}

Trie dict with end of word indicated by special character $

class Trie: def init(self): self.root = {}

def insert(self, word):

node = self.root

for char in word:

if char not in node:

node[char] = {}

node = node[char]

node['$'] = word

def search(self, word):

node = self.root

for char in word:

if char not in node:

return False

node = node[char]

return '$' in node

def startsWith(self, prefix):

node = self.root

for char in prefix:

if char not in node:

return False

node = node[char]

return True

def suggestions(self, prefix):

def dfs(node):

results = []

if not node: return results

if '$' in node:

results.append(node['$'])

for child in node:

results.extend(dfs(node[child]))

return results

node = self.root

for char in prefix:

if char not in node:

return []

node = node[char]

return dfs(node) ```

Suffix Trees/Arrays

A special kind of trie, called a suffix tree, can be used to index all suffixes in a text in order to carry out fast full text searches. The construction of such a tree for the string $S$ takes linear time and space relative to the length of $S$. A suffix tree is basically like a search trie: there is a root node, edges going out of it leading to new nodes, and further edges going out of those, and so forth. Unlike in a search trie, the edge labels are not single characters. Instead, each edge is labeled using a pair of integers: [from, to], which are pointers into the text. In this sense, each edge carries a string label of arbitrary length, but takes only $\mathcal{O}(1)$ space (two pointers).

Some example use cases are as follows:

-

Find all occurrences of $q$ as a substring of $S$: In collapsed suffix trees, it takes $\mathcal{O}(|q| + k)$ time to find the $k$ occurrences of $q$ in $S$.

-

Locating a substring if a certain number of mistakes or edits are allowed

-

Locating matches for a regular expression pattern

-

Finding longest common substring within a set of strings in linear-time

-

Find the longest palindrome in $S$

Storing a string’s suffix tree typically requires significantly more space than storing the string itself. Observe that most of the nodes in a trie-based suffix tree occur on simple paths between branch nodes in the tree. Each of these simple paths corresponds to a substring of the original string. By storing the original string in an array and collapsing each such path into a single edge, we have all the information of the full suffix tree in only $\mathcal{O}(n)$ space. The label for each edge is described by the starting and ending array indices representing the substring.

The suffix tree for the string $S$ of length $n$ is defined as a tree such that:

-

The tree has exactly $n$ leaves numbered from $1$ to $n$.

-

Except for the root, every internal node has at least two children.

-

Each edge is labelled with a non-empty substring of $S$.

-

No two edges starting out of a node can have string-labels beginning with the same character.

-

The string obtained by concatenating all the string-labels found on the path from the root to leaf $i$ spells out suffix $S[i \cdots n]$, for $i$ from $1$ to $n$.

Suffix arrays do most of what suffix trees do, while using roughly four times less memory. They are also easier to implement. A suffix array is, in principle, just an array that contains all the $n$ suffixes of $S$ in sorted order. Thus a binary search of this array for string $q$ suffices to locate the prefix of a suffix that matches $q$, permitting an efficient substring search in $O(\log n)$ string comparisons. With the addition of an index specifying the common prefix length of all bounding suffixes, only $\log n+|q|$ character comparisons need be performed on any query, since we can identify the next character that must be tested in the binary search.

In a suffix array, a suffix is represented completely by its unique starting position (from $1$ to $n$) and read off as needed using a single reference copy of the input string. Some care must be taken to construct suffix arrays efficiently, however, since there are $O(n^2)$ characters in the strings being sorted. One solution is to first build a suffix tree, then perform an in-order traversal of it to read the strings off in sorted order. However, more recent breakthroughs have lead to space/time efficient algorithms for constructing suffix arrays directly.

Python Implementation

``` {.python language=”Python”} from itertools import zip_longest, islice

def to_int_keys(l): seen = set() ls = [] for e in l: if not e in seen: ls.append(e) seen.add(e) ls.sort() index = {v: i for i, v in enumerate(ls)} return [index[v] for v in l]

def suffix_array(s): “”” suffix array of s, TC: O(n * log(n)^2) “”” n = len(s) k = 1 line = to_int_keys(s) while max(line) < n - 1: line = to_int_keys( [a * (n + 1) + b + 1 for (a, b) in zip_longest(line, islice(line, k, None), fillvalue=-1)]) k «= 1 return line

### Merkle Trees

A **hash tree** or Merkle tree is a tree in which every leaf node is

labelled with the cryptographic hash of a data block, and every non-leaf

node is labelled with the cryptographic hash of the labels of its child

nodes. Hash trees allow efficient and secure verification of the

contents of large data structures. Hash trees are a generalization of

hash lists and hash chains.

*Python Implementation*

``` {.python language="Python"}

from hashlib import sha256

def hash(x):

S = sha256()

S.update(x)

return S.hexdigest()

def merkle(node):

if not node:

return '#'

m_left = merkle(node.left)

m_right = merkle(node.right)

node.merkle = hash(m_left + str(node.val) + m_right)

return node.merkle

# Two trees are identical if the hash of their roots are equal (except for collisions)

def isSubtree(s, t):

merkle(s)

merkle(t)

def dfs(node):

if not node:

return False

return (node.merkle == t.merkle or

dfs(node.left) or dfs(node.right))

return dfs(s)

Kd-Trees

Kd-trees and related spatial data structures hierarchically partition k-dimensional space into a small number of cells, each containing a few representatives from an input set of points. This provides a fast way to access any object by position. We traverse down the hierarchy until we find the smallest cell containing it, and then scan through the objects in this cell to identify the right one. Building the tree can be done in $O(N \log N)$, where the bottleneck is a requirement of presorting the points and finding the medians (but we only need to do this once). Search, Insert, Delete all have runtime of $\mathcal{O}(\log N)$, similar to how a normal binary tree works (with a tree balancing mechanism).

Typical algorithms construct kd-trees by partitioning point sets. Ideally, this plane equally partitions the subset of points into left/right (or up/down) subsets. Partitioning stops after $\log n$ levels, with each point in its own leaf cell. Each box-shaped region is defined by $2k$ planes, where $k$ is the number of dimensions. Useful applications are as follows:

-

Point location – To identify which cell a query point $q$ lies in, we start at the root and test which side of the partition plane contains $q$.

-

Nearest neighbor search – To find the point in $S$ closest to a query point $q$, we perform point location to find the cell $c$ containing $q$

-

Range search – Which points lie within a query box or region? Starting from the root, check whether the query region intersects (or contains) the cell defining the current node. If it does, check the children; if not, none of the leaf cells below this node can possibly be of interest.

-

Partial key search – Suppose we want to find a point p in S, but we do not have full information about p. Say we are looking for someone of age 35 and height 5’8” but of unknown weight in a 3D-tree with dimensions of age, weight, and height. Starting from the root, we can identify the correct descendant for all but the weight dimension

Kd-trees are most useful for a small to moderate number of dimensions, say from 2 up to maybe 20 dimensions, otherwise they suffer from what’s known as the curse of dimensionality. Algorithms that quickly produce a point provably close to the query point are a recent development in higher-dimensional nearest neighbor search. A sparse weighted graph structure is built from the data set, and the nearest neighbor is found by starting at a random point and walking greedily in the graph towards the query point.

Python Implementation

``` {.python language=”Python”}

Using heap (relative distance without square root)

def KNN_to_origin(points, K): def distance_to_origin(p): return p[0]2 + p[1]2 q = [] for point in points: heapq.heappush(q, (distance_to_origin(point), point)) return [ heapq.heappop(q)[1] for _ in range(K) ]

Using a KDTree

from scipy import spatial def KNN_to_origin(points, K): tree = spatial.KDTree(points) # x is the origin, k is the number of closest neighbors, p=2 refers to choosing L2 norm (euclidean distance) distance, idx = tree.query(x=[0,0], k=K, p=2) return [points[i] for i in idx] if K > 1 else [points[idx]]

### Segment Trees

A Segment Tree can be used for storing information about intervals, or

segments. It allows querying which of the stored segments contain a

given point. In principle, it's a static structure, meaning it cannot be

modified once it's built. For $n$ intervals, a segment tree uses

$\mathcal{O}(n \log n)$ storage, can be built in $\mathcal{O}(n \log n)$

time, and support searching for all the intervals that contain a query

point in $\mathcal{O}(\log n + k)$, $k$ being the number of retrieved

intervals or segments.

1. Segment tree $T$ is a binary tree.

2. Leaves in $T$ correspond to the intervals described by the endpoints

in set of intervals $I$. The ordering of intervals is maintained in

the tree, i.e. the leftmost leaf corresponds to the leftmost

interval.

3. The internal nodes of $T$ correspond to intervals that are the union

of elementary intervals.

4. Each node or leaf in $T$ stores the interval of itself and a set of

intervals in a data structure.

Similar trees that operate on intervals are described below:

- **Segment trees** stores intervals and are optimized for "which of

these intervals contains a given point\" queries.

$\mathcal{O}(n \log n)$ preprocessing time, $\mathcal{O}(k+\log n)$

query time, $\mathcal{O}(n \log n)$ space. Interval can be

added/deleted in $\mathcal{O}(\log n)$ time.

- **Interval trees** store intervals as well, but are optimized for

"which of these intervals overlap with a given interval\" queries.

They can also be used for point queries - similar to segment tree.

$\mathcal{O}(n \log n)$ preprocessing time, $\mathcal{O}(k+\log n)$

query time, $\mathcal{O}(n)$ space. Interval can be added/deleted in

$\mathcal{O}(\log n)$ time

- **Range trees** store points and are optimized for "which points

fall within a given interval\" queries.

$\mathcal{O}(n \log n)$ preprocessing time, $\mathcal{O}(k+\log n)$

query time, $\mathcal{O}(n)$ space. New points can be added/deleted

in $\mathcal{O}(\log n)$ time

- **Binary indexed trees** stores items-count per index, and are

optimized for "how many items are there between index m and n\"

queries.

$\mathcal{O}(n \log n)$ preprocessing time, $\mathcal{O}(\log n)$

query time, $\mathcal{O}(n)$ space. The items-count per index can be

increased in $\mathcal{O}(\log n)$ time

*Python Implementation*

``` {.python language="Python"}

#Segment tree node

class Node(object):

def __init__(self, start, end):

self.start = start

self.end = end

self.total = 0

self.left = None

self.right = None

class SegmentTree(object):

def __init__(self, nums):

self.root = createTree(nums, 0, len(nums)-1)

def createTree(nums, l, r):

if l > r:

return None

#leaf node

if l == r:

n = Node(l, r)

n.total = nums[l]

return n

mid = (l + r) // 2

root = Node(l, r)

#recursively build the Segment tree

root.left = createTree(nums, l, mid)

root.right = createTree(nums, mid+1, r)

#Total stores the sum of all leaves under root

#i.e. those elements lying between (start, end)

root.total = root.left.total + root.right.total

return root

Self-balancing Trees

Balanced search trees use local rotation operations to restructure search trees, moving more distant nodes closer to the root while maintaining the in-order search structure of the tree. The balance factor of a node in a binary tree is the height of its right subtree minus the height of its left subtree.

Among balanced search trees, AVL and 2/3 trees are now considered out-dated while red-black trees seem to be more popular. A particularly interesting self-organizing data structure is the splay tree, which uses rotations to move any accessed key to the root. Frequently used or recently accessed nodes thus sit near the top of the tree, allowing faster searches.

AVL Trees

An AVL tree is a self-balancing binary search tree. A node satisfies the AVL invariant if its balance factor is between -1 and 1. A binary tree is AVL-balanced if all of its nodes satisfy the AVL invariant, so we can say that an AVL tree is a binary search tree that is AVL-balanced.

To maintain the AVL condition, perform an insertion/deletion using the typical BST algorithm, then if any nodes have the balance factor invariant violated, restore the invariant. We can simply do so after the recursive Insert, Delete, ExtractMax, or ExtractMin call. So we go down the tree to search for the correct spot to insert the node, and then go back up the tree to restore the AVL invariant. In fact, these restrictions make it straightforward to define a small set of simple, constant-time procedures to restructure the tree to restore the balance factor in these cases. Recall, these procedures are called rotations.

The worst-case running time of AVL tree insertion and deletion is $\mathcal{O}(h)$, where $h$ is the height of the tree, the same as for the naive insertion and deletion algorithms. An AVL tree with $n$ nodes has height at most $1.44 \log n$. AVL tree insertion, deletion, and search have worst-case running time $\Theta(\log n)$, where $n$ is the number of nodes in the tree

Red–black Trees

A red–black tree is a kind of self-balancing binary search tree. Each node of the binary tree has an extra bit which is often interpreted as the color (red or black) of the node. These color bits are used to ensure the tree remains approximately balanced during insertions and deletions.

Balance is preserved by painting each node of the tree with one of two colors in a way that satisfies certain properties, which collectively constrain how unbalanced the tree can become in the worst case. When the tree is modified, the new tree is subsequently rearranged and repainted to restore the coloring properties. The properties are designed in such a way that this rearranging and recoloring can be performed efficiently. The balancing of the tree is not perfect, but it is good enough to allow it to guarantee searching in $O(\log n)$ time.

Properties:

-

Each node is either red or black.

-

The root is black. This rule is sometimes omitted. Since the root can always be changed from red to black, but not necessarily vice versa, this rule has little effect on analysis.

-

All leaves (NIL) are black.

-

If a node is red, then both its children are black.

-

Every path from a given node to any of its descendant NIL nodes goes through the same number of black nodes.

2-3 Trees and B-Trees

A B-tree is a self-balancing tree data structure that maintains sorted data and allows searches, sequential access, insertions, and deletions in logarithmic time ($\mathcal{O}(\log n)$). The B-tree generalizes the binary search tree, allowing for nodes with more than two children and multiple keys. It is commonly used in databases and file systems.

The idea behind a B-tree is to collapse several levels of a binary search tree into a single large node containing multiple keys, so that we can make the equivalent of several search steps before another disk access is needed. This is because we utilize whole blocks of disk memory per level of B-tree when the CPU performs low-bandwidth reads from disk memory. This is in contrast to the CPU performing high-bandwidth reads of words from cache memory.

The branching factor B indicates the number of keys and children a

node may have. $$\begin{aligned}

B \leq \text{number of children} < 2B

B - 1 \leq \text{number of keys} < 2B - 1

\end{aligned}$$ A 2-3 tree is a B-tree with branching factor of 2. This means it has at most 2 keys and at most 3 children.

B-trees are constructed in a bottom-up way: values are inserted into a node based on binary search. If the node reaches its capacity based on the degree of the B-tree, then it is split in half with left or right bias and an appropriate root (median value) and children are selected and appointed to existing or new nodes.

For implementing multi-level indexing in a database, every node will have a key to be indexed by a pointer to its child nodes in their memory blocks as well as a pointer to a record on the database (value). In a B+ tree, only leaf nodes contain a record pointer with leaf nodes also containing a copy of corresponding parent keys.

Graphs

A graph is simply a collection of nodes, some of which may have edges between them. With this definition, we see that a tree is a connected graph that does not have cycles. Graphs can be either directed or undirected. A graph might consist of multiple isolated subgraphs. If there is a path between every pair of vertices, it is called a connected graph. A graph can also have cycles (or not), an acyclic graph is one without cycles. Note that a tree is undirected and acyclic, which differs from a directed and acyclic graph in which sibling nodes can be joined by edges in a diamond-like shape. There are two common ways to represent a graph: adjacency lists and adjacency matrices.

In an adjacency list representation, every vertex stores a list of adjacent vertices. In an undirected graph, an edge like $(a, b)$ would be stored twice: once in $a$’s adjacent vertices and once in $b$’s adjacent vertices. An adjacency list is faster and uses less space for sparse graphs and conversely, it will be slower and contain redundancies for dense graphs.

An adjacency matrix is an $N$x$N$ boolean matrix (where $N$ is the number of nodes), where a true value at $M_{i,j}$ indicates an edge from node $i$ to node $j$. (You can also use an integer matrix with $0$s and $1$s.) In an undirected graph, an adjacency matrix will be symmetric. In a directed graph, it will not (necessarily) be. An adjacency matrix will be faster for dense graphs and simpler for graphs with weighted edges, but it will use more space, always having $\mathcal{O}(V^2)$ space complexity.

Some questions to ask when deciding on a representation include:

-

How big will your graph be? – Adjacency matrices make sense only for small or very dense graphs.

-

How dense will your graph be? —- If your graph is very dense, meaning that a large fraction of the vertex pairs define edges, there is probably no compelling reason to use adjacency lists. You will be doomed to using $\Theta(n^2)$ space anyway. Indeed, for complete graphs, matrices will be more concise due to the elimination of pointers.

-

Which algorithms will you be implementing? – Certain algorithms are more natural on adjacency matrices (such as all-pairs shortest path) and others favor adjacency lists (such as most DFS-based algorithms). Adjacency matrices win for algorithms that repeatedly ask, “Is (i,j) in G?" However, most graph algorithms can be designed to eliminate such queries.

-

Will you be modifying the graph over the course of your application? – Efficient static graph implementations can be used when no edge insertion/deletion operations will done following initial construction. Indeed, more common than modifying the topology of the graph is modifying the attributes of a vertex or edge of the graph, such as size, weight, label, or color. Attributes are best handled as extra fields in the vertex or edge records of adjacency lists.

Planar graphs are those that can be drawn in the plane so no two edges cross. Planar graphs are always sparse, since any n-vertex planar graph can have at most $3n - 6$ edges, thus they should be represented using adjacency lists. Euler’s Formula states $v-e+f=2$ for all planar graphs, where numbers $v =$ vertices $e =$ edges, and $f =$ faces.

Python Implementation

``` {.python language=”Python”} edges = [[‘A’, ‘B’], [‘B’, ‘C’], [‘C’, ‘A’]]

Directed graph using an adjacency list

def construct(edges): g = collections.defaultdict(list) for source, target in edges: g[source].append(target)

Undirected graph using an adjacency list

def construct(edges): g = collections.defaultdict(set) for source, target in edges: g[source].add(target) g[target].add(source)

Undirected graph using an adjacency matrix

def construct(edges): nodes = set() for e in edges: nodes.update({e[0], e[1]}) n, ordering = len(nodes), list(nodes) g = [[0] * n for _ in range(n)] for source, target in edges: i, j = ordering.index(source), ordering.index(target) g[source][target] = 1

### Flow Networks

A flow network is a directed graph where each edge has a capacity and

each edge receives a flow, usually represented as a fraction

$flow_i/capacity_i$. A flow must satisfy the restriction that the amount

of flow into a node equals the amount of flow out of it, unless it is a

source $s$, which has only outgoing flow, or sink $t$, which has only

incoming flow. Often we are in search of the max flow of the network

which can be found using the **Ford--Fulkerson algorithm**. Or we may be

in search of a **bottleneck** node,

$$bottleneck = min(capacity_i - flow_i \ \ \forall i \text{ in the network}).$$

### Union-Find

A union--find data structure (a.k.a disjoint-set union or DSU) stores a

collection of non-overlapping sets. In a graph, a set can be thought of

as a tree, i.e. an acyclic and connected subgraph, making union-find a

quick method for determining if a graph contains cycles. Disjoint-set

data structures play a key role in **Kruskal's algorithm** for finding

the **minimum spanning tree** of a graph, which is a subset of the edges

of a connected, weighted, undirected graph that connects all the

vertices together, without any cycles and with the minimum possible

total edge weight. Union-find can also be used to keep track of the

connected components of an undirected graph. The data structure

maintains three operations,

1. **makeset(A)** -- Create a new size-1 set containing just element A.

2. **find(A)** -- Starts at A and returns A's tree root.

3. **union(A, B)** -- Finds the root for A and B using the find

operation, then sets B's parent to be A, combining the two trees

into one.

Two optimizations bring the amortized time for both the union and find

operations from $\mathcal{O}(\log N)$ close to $\mathcal{O}(1)$. The

find operation limits the number of repeated traversals by keeping track

of all the nodes along the path using **path compression**. This is done

by storing a reference to a node's parent in a list on the initial find

call for all nodes in the traversed path, where the list has size of the

node and since its index maps to specific node, we initialize the parent

to be itself. In a union operation, we want to ensure the larger set

remains the root to ensure the tree depth is minimised, this is known as

**union by rank**. To do this, we need to track the size of each set in

a list, again where indices correspond to a node.

To perform a sequence of $m$ addition, union, or find operations on a

disjoint-set forest with $N$ nodes requires total time

$\mathcal{O}(m \cdot \alpha(N))$, where $\alpha(N)$ is the extremely

slow-growing inverse Ackermann function, which is effectively considered

equal to $\mathcal{O}(1)$.

*Python Implementation*

``` {.python language="Python"}

class UnionFind:

def __init__(self, size):

self.parent = list(range(size))

# We use this to keep track of the size of each set.

self.rank = [0] * size

def find(self, x):

# recursive path compression

if x != self.parent[x]:

self.parent[x] = self.find(self.parent[x])

return self.parent[x]

def union(self, x, y):

# Find the parents for x and y.

px = self.find(x)

py = self.find(y)

# Check if they are already in the same set.

if px == py:

return False

# We want to ensure the larger set remains the root.

if self.rank[px] > self.rank[py]:

self.parent[py] = px

self.rank[px] += self.rank[py]

else:

self.parent[px] = py

self.rank[py] += max(1, self.rank[px])

# Return true if merge occurred

return True

def same_group(self, x, y):

return self.find(x) == self.find(y)

Algorithms and Techniques

Sequence Search and Sort

Binary Search

Time Complexity: $\mathcal{O}(\log n)$ average and worst case. Space Complexity: $\mathcal{O}(1)$

In binary search, we look for an element $x$ in a sorted array by first comparing $x$ to the midpoint of the array. If $x$ is less than the midpoint, then we search the left half of the array. If $x$ is greater than the midpoint, then we search the right half of the array. We then repeat this process, treating the left and right halves as subarrays. Again, we compare $x$ to the midpoint of this subarray and then search either its left or right side. We complete this process when we either find $x$ or the subarray has size 0.

In general, if we can discover some kind of monotonicity, i.e. if condition(index) is True then condition(index + 1) is also True, then we can consider binary search. In this sense, binary search can be thought of as the canonical example of a divide and conquer algorithm. Another notable example is local peak finding in one and two dimensional inputs, where we want to find a number $v$ such that $u < v < w$ where the numbers $u,v,w$ occur in that order. Instead of a linear scan in $\mathcal{O}(n)$, we start with the middle item of the array and compare it with its adjacent elements to determine increasing direction to recurse in, pruning half of the input with every step and finding a peak in $\mathcal{O}(\log n)$.

In some cases we can derive a lower and upper bound on our answer from our input, possibly by finding the sum and the min/max value. Instead of incrementally attempting values in the range of possible values, we should use binary search with backtracking to find a minimum or maximum valid solution.

Instead of searching for an exact target, we may want to return the index of next smallest or next largest index. To do this, we can remove the return on equivalence and return our left pointer instead of -1 after the while loop terminates. For finding the next largest index, we also use an equivalence in the $\geq$ comparator conditional for moving our left pointer forward.

Python Implementation

``` {.python language=”Python”} def binary_search(nums, target): if len(nums) == 0: return -1 left, right = 0, len(nums) - 1 while left <= right: mid = (left + right) // 2 if nums[mid] == target: return mid elif nums[mid] < target: left = mid + 1 else: right = mid - 1 return -1

A = [-14, -10, 2, 108, 108, 243, 285, 285, 285, 401]

Bisect module implements most binary search use cases

import bisect

insert into sorted array while maintaining order in O(n)

bisect.insort(A, 3)

return index of first occurence of target element O(logn)

bisect.bisect_left(A, -10)

return index to the right of last occurence of target element O(logn)

bisect.bisect_right(A, -10)

def search_leftmost(nums, target): lo, hi = 0, len(nums) - 1 while lo <= hi: mid = (lo + hi) // 2 if target > nums[mid]: lo = mid + 1 else: # Equivalence moves search range to the left hi = mid - 1 return lo